How would you go about teaching professors, long-term researchers, and leaders of academic institutions to use monitoring and evaluation (M&E) for change? How would you teach them M&E, or persuade them to offer it as a certificate, postgraduate, or master’s course that creates a multiplier effect? This was Cloneshouse’s mission in Kigali, Rwanda, with the Capacity Consulting Africa team. Over four days, I facilitated sessions on M&E for change with 45 leaders from Nigerian universities.

According to Evaluation for Agenda 2030 (p. 73), universities play a crucial role in disseminating knowledge about evaluation and evaluation methods, especially where policy guidance encourages the evaluation of all projects and programs. Does Nigeria have such a policy? Absolutely! Are Nigerian universities offering M&E as a certificate, postgraduate, or master’s course? I’d say no. In this piece, I reflect on three cross-cutting themes from my conversations with the leadership of Nigerian higher education institutions.

Stakeholder Engagement

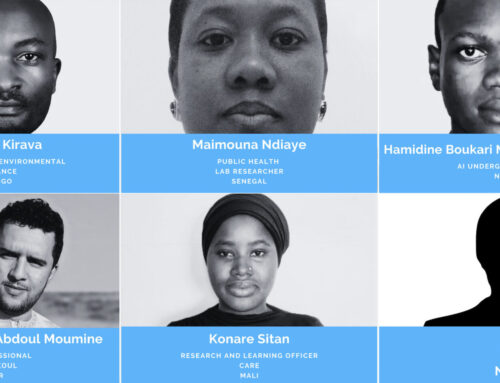

Participants are taken through how to develop a data collection plan

Some workshop participants mentioned that they weren’t adequately consulted about programs and projects run by international partners or about Nigerian government initiatives. They felt betrayed, especially when called upon to independently verify ongoing programs. So, does the way international development interventions are programmed affect how monitoring and evaluation are perceived? I’d say yes. As one participant complained:

“Some of us here participate in independent verifications and we see certain things that were wrong in the conceptualization of those programs. The programming error makes us wonder why there were verifications when things were wrong from the start.”

But is it true that stakeholders are not being engaged? Or is the issue the extent of the engagement? Or perhaps the resources available for engaging stakeholders? Looking inward, I have participated in and facilitated stakeholder engagements, especially at the conceptualization stage of programs. Anecdotally, the issue may be the extent of engagement rather than its absence. But what resources are available to reach millions of stakeholders? A glance at World Bank project documents shows that some projects do include stakeholder engagement plans. But is that enough?

Data Quality

The Nigeria Data Protection Law is a game-changer for data reliability, but does it address other data quality dimensions, such as accuracy, completeness, and timeliness? I have written about data quality issues in Nigeria and argued that data quality has been politicized at various levels, from individuals and organizations up to government. Participants questioned how much influence they could have over data collection activities.

“Nigeria has a data privacy and protection law but doesn’t have a data quality guideline or law. This is a big concern for me as a statistician because it’s difficult to make decisions based on some of our data,” one participant lamented.

Some participants recognized their role in making data management a core part of their curricula, perhaps as a general course open to all students. This conversation led us to the topic of artificial intelligence (AI). I argued that AI language models, in a country like Nigeria, will continue to produce outputs that fall short on key data quality dimensions. A few participants, convinced that AI is here to stay, suggested that their institutions urgently develop an AI policy or guideline.

Indigenous Approach to Evaluation

Participants co-creating their monitoring and evaluation framework

Evaluation rooted in culturally sensitive methodologies might address the two issues raised above. Arguably, the evaluation market, if not international development itself, is heavily Westernized. So, how would Indigenous approaches, like “Made in Africa” evaluation, thrive? Two participants explained why they advocate using only qualitative methods in their field:

“Internationally, we developed questions to ask respondents about their lifestyle. We received two divergent opinions from the same respondents when we administered a survey and when we conducted an individual interview.”

Chilisa and Malunga, in their 2012 paper ‘Made in Africa Evaluation: Uncovering African Roots in Evaluation Theory and Practice’, presented at the Bellagio Conference, suggested conducting evaluations in African settings using localized knowledge tools and data collection methods, while considering how evaluation work can be adapted to the lifestyles and needs of the African communities being evaluated. In practice, however, choosing between contextualized and conventional methods can be a significant challenge.

Interestingly, there were long debates among participants on the scientific implications of relying on people’s narratives and using tailored sets of questions to elicit information and insights. I had warned them that we would inevitably get into a debate between social scientists and scientists from more quantitative fields. They asked which category I belonged to. Well, I am an evaluator, and I make judgments based on intuition rooted in local knowledge and global thinking—whatever that means!

Conclusion

Making informed decisions depends on several factors, including where the information is generated, the quality of that information, and the lens through which we view the evidence. I am glad the university leaders were able to relate these ideas to their own contexts. Above all, they understood that their institutions are well positioned to drive change in the fast-growing field of evaluation.